This is the third post in my Prose and Processors series on the explicit and implicit pedagogies embedded in various generative AI technologies. As I wrote in my overview of the series, I aim to consider what these platforms are trying to teach us, especially about writing, via their theoretical, practical, material, embodied, ethical, and ideological dimensions. Previous posts:

Alongside LLM chatbots like ChatGPT and Gemini, as well as educational technology applications like Packback and Grammarly, the last few years have seen the rise of AI-powered research tools like Research Rabbit, Elicit, and Consensus. These tools purport to amplify researchers’ ability to find relevant scholarly literature, often in hyperbolic terms like “supercharge your workflows” (Research Rabbit) and “analyze papers at superhuman speed” (Elicit). It may seem odd to group these pedagogical machines with Packback and Grammarly, but in truth, these platforms will teach our students (and us) research processes, and not always in desirable ways.

For most of my academic career, I have searched for scholarly literature in databases (especially MLA International Bibliography, Google Scholar, and CompPile), perused reference lists for promising leads, happened upon books while skimming the stacks, and solicited recommendations from my scholarly network. I imagine most researchers do some combination of all four. Of these four, the only one AI companies are not attempting to replicate is shelf-skimming. Some platforms resemble scholarly databases, some visualize connected networks of references, all offer recommendations, and some even allow you to “chat” with a paper as if it were a colleague. These AI-driven capabilities can be a boon to researchers who must sift through an ever-increasing amount of literature amid their own studies. However, these capabilities also risk replacing crucial elements of scholarly search, especially deep reading and serendipity, with speed.

Put differently, AI research tools teach us that we can and should spend less time making sense of existing scholarship.

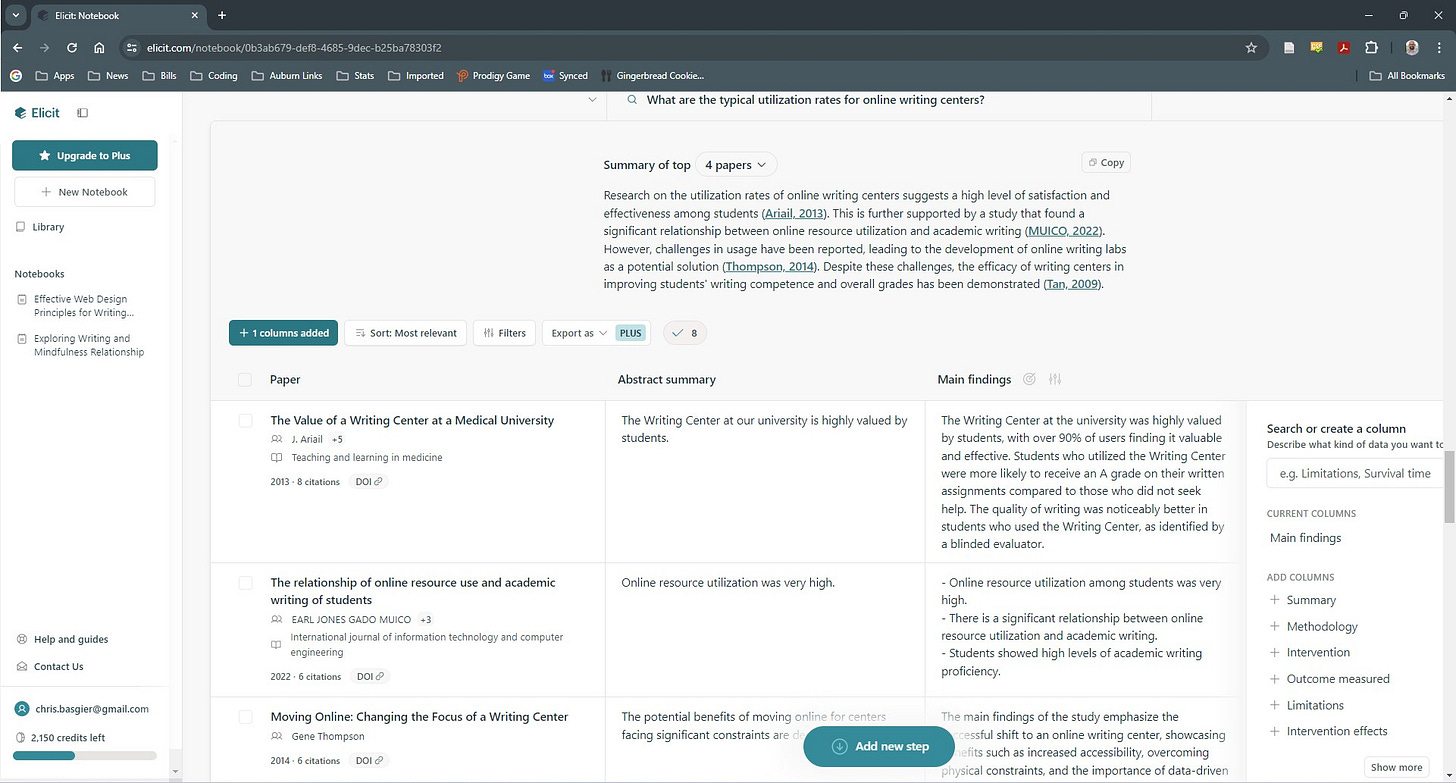

Elicit wears this goal on its sleeve. All I have to do is offer a research question, and it will summarize the top four results and generate a table that breaks down the abstract, methodology, outcomes, and other elements of study design, lickety split. This speed can mask potential inaccuracies and produce muddled explanations. For instance, when I asked Elicit about typical utilization rates for online writing centers, the first summary sentence stated, “Research on the utilization rates of online writing centers suggests a high level of satisfaction and effectiveness among students (Ariail, 2013).” However, Ariail’s piece is not about utilization at all, only satisfaction. The summary thus conflates two different measures in order to shoehorn the findings into my original research question. It’s fast, but it’s not exactly best scholarly practice.

A screen shot of Elicit’s interface, including a summary of the top 4 results and an auto-generated table.

Because these tools emphasize speed, researchers, and especially novices, will likely spend less time reading scholarly papers and therefore may not recognize muddled results.

Some readers may object that all of us do plenty of skimming in our literature searches already. We read abstracts, scan tables and figures, and perhaps read key sentences or paragraphs (e.g., section beginnings and endings) to get a feel for the overall piece before deciding to dive in. Consensus promises to streamline this process via a one-sentence summary, the occasional genre tag (e.g., “Systematic Review”), and study elements similar to Elicit’s (e.g., population, sample size, methods, and outcomes), all of which one might identify on a quick skim. However, experts’ ability to skim for key scholarly elements is earned through many years of deeper reading, which helps us identify conceptual and discursive anchors. In the best case, Consensus anchors me to key terms and study elements that privilege a replicable, aggregable, and data-supported research paradigm, which isn’t inherently bad but also isn’t universal across disciplines. It cannot replace a closer read; it can only direct me to certain kinds of reading over others. I am not convinced it is qualitatively better (or even faster) than a standard literature search on a scholarly database.

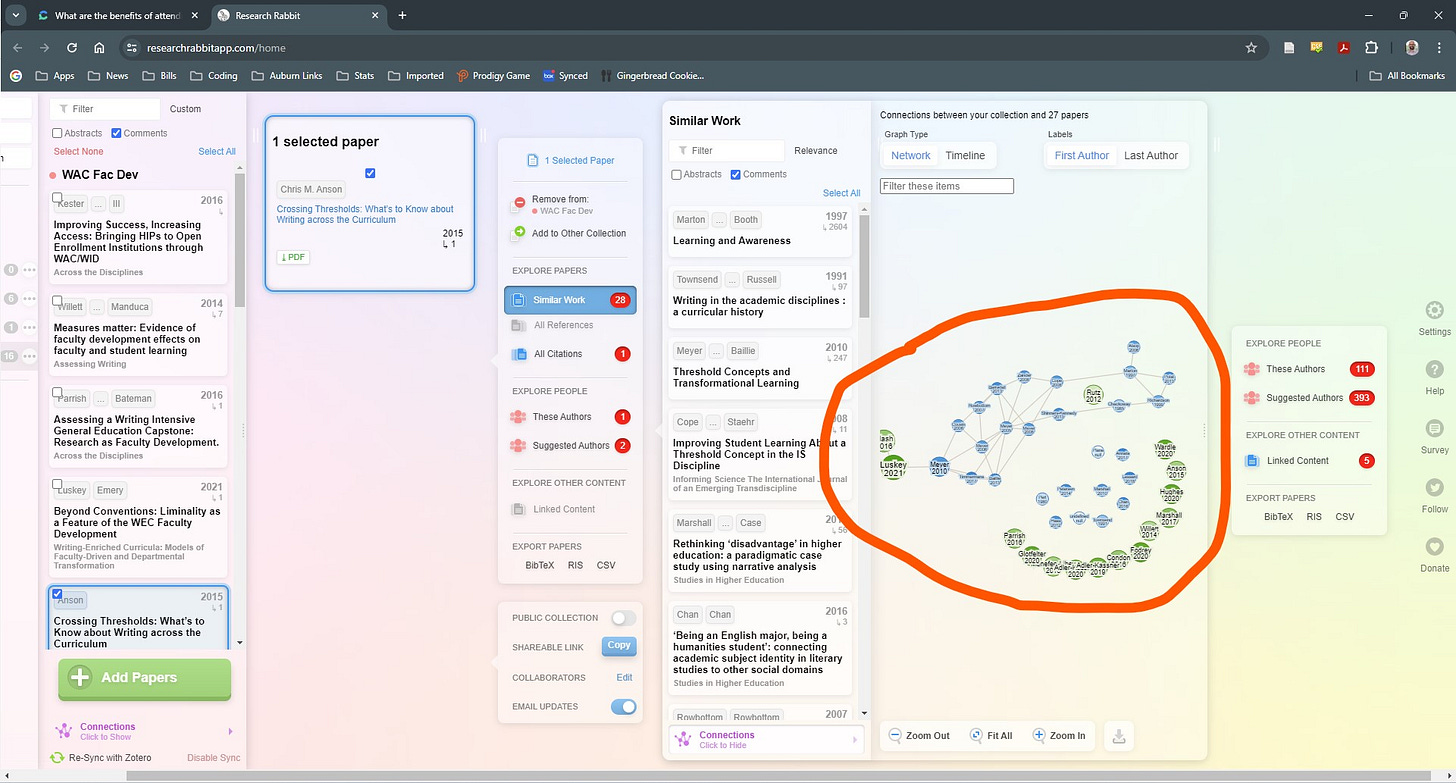

The same goes for Research Rabbit, whose core function is to visualize citation networks. The company likens itself to music services like Spotify, in that the app, in their own words, “learns what you love” and offers recommendations accordingly. Citation mapping can be a valuable methodology for understanding contexts and trends in a given field. However, this specific tool is limited by its source material. To test it out, I loaded a Zotero library for a recent article I published on faculty development in writing across the curriculum. Importantly, my article also uses citation mapping, albeit not in Research Rabbit.

I then selected the most-cited piece (according to my research, not this tool), which was by Chris Anson, and asked Research Rabbit for similar work. The image below represents the results. Blue items were not in my original list; green items were. Notice that Research Rabbit mostly does not recognize connections among my original citations, even though many cite one another. In other words, this tool cannot provide the contextual knowledge necessary for understanding the relationships within a citation network if those connections are not already present in its database. It should not be anyone’s sole source of citation network information.

A screen shot of Research Rabbit. Notice how few of the green references link to one another, even though my own research maps their connections.

That doesn’t mean it’s useless. A user might very well discover new literature from it. But that user will also need deep immersion in the field to understand the significance of what they find. These AI research platforms (especially Elicit and Consensus) suggest that such deep immersion is unnecessary for understanding a field.

However, deep immersion is crucial for sense-making and is tied intimately to effective, discipline-specific writing. The more researchers read in their fields, the more they can begin to internalize the discursive conventions of those fields. In a worst-case AI-driven research ecosystem, scholars won’t spend enough time reading scholarship to internalize those conventions. They may have to rely on AI to discover citation networks, extract ideas from papers, and produce the discursive features that editors, reviewers, and disciplinary experts expect to see. A lack of immersion in a field’s discourse, brought on by AI-driven scholarly search, may thus accelerate the need for AI to produce discipline-specific prose: prose with clearly articulated ideas appropriate for scholarly domains with rich theory, highly technical language, or assumed paradigms that shape thinking in often-unacknowledged ways.

That’s a tall order for LLM technologies built on numerically produced language patterns rather than on rhetorically rich, contextually embedded discursive knowledge. Until such technology arrives, it behooves us to teach our students the far-reaching research practices involved in search and discovery, rather than adopt a tool-first mentality in the service of speed. In doing so, we can teach them to make sense of scholarship, and perhaps stave off the need for ever more tech in the scholarly process.